Sometimes I use a simple mnemonic to evaluate an organisation's cybersecurity maturity. It's not perfect, but it's a useful tool to spark meaningful discussions about their security posture.

It boils down to asking one question.

How does an organisation decide what technical controls to implement? In other words, what drives the decision to enable a mitigation?

There are roughly three different ways an organisation builds practical cybersecurity:

- Incident-led: Reactive. Focus on security after an incident. Everything is on fire all the time.

- Controls-led: Compliance-based. Follow a control framework to the letter on every decision.

- Risk-led: Contextual. Justifiable decisions to mitigate plausible threat scenarios in the context in which the organisation operates.

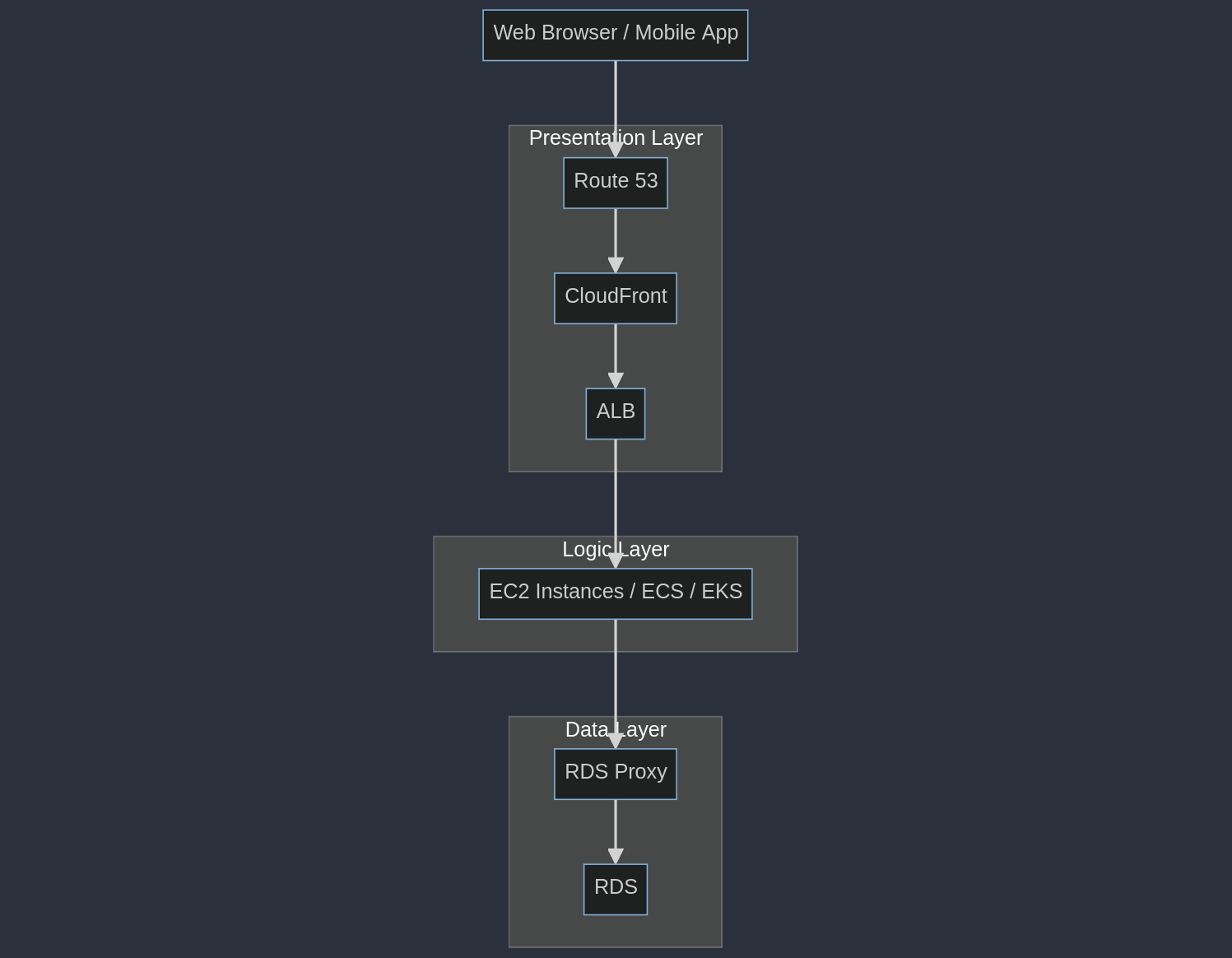

Imagine you're managing a typical 3-tier web application hosted on a cloud service provider like AWS. To secure that application, where do you begin?

Your first thoughts should be about business requirements, risk frameworks, or asking for more context.

Let's build some context. This is an e-commerce web app, hosted in AWS. Even if you're not familiar with AWS, follow along.

The decision you need to make is whether you need to segment this web application from the staging environment or not.

I used the word need because the application can definitely work and deliver value without any segmentation. Who is to say that we need to segment stuff?

Incident-led

An incident-led approach is typically driven by external pressure: from auditors, from regulators, or in the aftermath of a security incident. Security changes most likely happen after something has gone wrong and a security capability is found to be lacking.

The organisation needs this security capability, or it risks losing a licence or paying a fine. There was no prior consideration; external forces drive decision-making. Sprinkle in knee-jerk reactions and constant firefighting.

The need for the capability is driven by a past incident that caused downtime or a fine. A vendor came in and promised to do everything and then more. Maybe the organisation bought into Software Composition Analysis because in 2021 they didn't realise they were running Log4j.

Back to our example. Do we segregate our staging and production environments? No.

Is there any sort of compartmentalisation? No.

We start a programme of work to do this after a developer accidentally deploys to production instead of staging. We get very specific requirements from a third party and try to deliver those to a T.

The requirements focus on capabilities and the answers are often a security product. Usually at this point there is a Request For Proposal sent out to vendors to try to match and build that capability. After purchasing the capability, it's revealed that it doesn't quite fit the mitigation that the organisation had in mind.

The collective understanding of what the organisation is trying to achieve is lacking.

We now have a firewall appliance and have split the network into two, but the appliance doesn't work with anything else in our network. We will probably have to pay the vendor to operate it. And in order to promote workloads from staging to prod, the appliance pretty much allows all-to-all traffic between the "two networks", so it's just an extra hop now. We don't want to break stuff.
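The "extra hop" problem can be made concrete with a small check. This is only a sketch: the `Rule` structure, the `ANY` wildcard, the segment names, and the `is_effectively_open` helper are all hypothetical and not tied to any vendor's rule format. It flags a rule set in which some allow rule matches every source, destination, and port.

```python
from dataclasses import dataclass

ANY = "any"  # hypothetical wildcard marker, not a real vendor keyword


@dataclass(frozen=True)
class Rule:
    source: str       # e.g. "staging", "prod", or ANY
    destination: str  # e.g. "staging", "prod", or ANY
    port: str         # e.g. "443", or ANY
    action: str       # "allow" or "deny"


def is_effectively_open(rules: list[Rule]) -> bool:
    """Return True if some allow rule matches all traffic between segments."""
    return any(
        r.action == "allow"
        and r.source == ANY
        and r.destination == ANY
        and r.port == ANY
        for r in rules
    )


# What the appliance in our story ends up with (illustrative).
appliance_rules = [
    Rule(source=ANY, destination=ANY, port=ANY, action="allow"),
]

# A narrower alternative, also illustrative.
scoped_rules = [
    Rule(source="staging", destination="prod", port="443", action="allow"),
    Rule(source=ANY, destination=ANY, port=ANY, action="deny"),
]

print(is_effectively_open(appliance_rules))  # True
print(is_effectively_open(scoped_rules))     # False
```

If the first rule set is what is actually deployed, the split exists on the diagram but not in practice.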

It's cheaper short-term than tackling the root causes, so we'll follow a capability model of what the vendor offers.

Next year we'll buy a better capability and possibly pivot to another area.

The direction of this type of organisation will be to go cybersecurity shopping every year, buying products to fulfil their missing capabilities, largely reactively.

The next step is usually enquiring about how to build a structured approach to cybersecurity and stop being driven by external priorities. However, it tends to focus on panacea-seeking solutions: that one product that can really help solve that problem. Because it's almost always the problem of the product - "we bought the wrong thing".

By the time the organisation reaches a pivot point, an external force resets the cycle, and the whole thing starts again.

The overall approach is binary and vendor-led. It represents the lowest form of cybersecurity maturity, and puts a lot of pressure on the people on the ground.

Controls-led

In a controls-led approach, the organisation follows predefined frameworks dogmatically: ISO 27001, SOGP, NIST, CIS Critical Security Controls, etc.

Pick any of them and we'll find something like ABC-10: Segmentation and Subnetworks. Aha! It says:

"Define segmentation, label your stuff with classification, build subnetworks, think of regulators and risk."

-- Your favourite control framework

We can do this. We draw a line on our diagram. If customer data goes down a path, we'll call this production. If customer data doesn't go down that path, it's... not production.

These two paths never merge. We'll put them in different VPCs, or maybe even different accounts. Great. Alice, though, will still have access to deploy to both environments from her machine. Maybe we'll stop Alice, but we can't "stop" Jenkins.

Now we've got segmentation. Except the things that need to talk to both environments at the same time like security scanners, "break glass" access, CI/CD pipelines, observability tooling, that ETL job that anonymises data from prod to dev every Friday so we can run analytics, and those two components that point from prod to test because if we migrate them, we might break everything, and they are way down our backlog.
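One way to see how much of that line survives is to write the flows down and pick out the ones that cross it. A rough sketch follows; the `Flow` type and the example entries are hypothetical, loosely based on the exceptions listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    name: str
    source_env: str
    dest_env: str


def crossing_flows(flows: list[Flow]) -> list[Flow]:
    """Return the flows whose source and destination environments differ."""
    return [f for f in flows if f.source_env != f.dest_env]


# Illustrative inventory, not a real one.
flows = [
    Flow("web to app server", "prod", "prod"),
    Flow("weekly anonymising ETL job", "prod", "dev"),
    Flow("legacy component pointing at test", "prod", "test"),
    Flow("integration tests", "staging", "staging"),
]

for flow in crossing_flows(flows):
    print(f"{flow.name}: {flow.source_env} -> {flow.dest_env}")
```

Every entry this prints is an exception that someone has to justify, own, and revisit.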

Upon review, that might not quite fit what the frameworks prescribe, or it might. In general there is nervousness about the approach, but the debate never settles. There isn't an established, agreed position, and the solution is mostly to read further into the scripture.

An organisation like that struggles to understand why segmentation is or will be important to their business, but they have a north star to follow.

The direction of this type of organisation is that it constrains itself, arguing about the usefulness of a control versus the requirements of the control catalogue.

The approach is unfortunately self-limiting as the decisions that need to be made are also binary, like our incident-led approach, albeit they are better informed and not reactive. If there is a relevant control to your application, then you must apply that control. There is no gradient, it's either on or off.

The organisation tends to associate an enabled control with compliance and a control that isn't enabled with non-compliance. Compliance drives security. If a decision doesn't make sense from a security perspective, we'll still argue the potential compliance merits, imagining requirements from mythical regulators. Phrases like "defence in depth", without corresponding attack trees or threat models, are very popular.

Context is not included. Context-specific decisions are difficult to make. First turn everything on, then ask questions. Cybersecurity becomes a burden and a tick-box exercise. It's sometimes performative or ceremonial security.

Risk-led

The risk-led approach starts by asking why. In contrast to controls-led, it starts with understanding the context and qualities of the system.

Maybe it's Shostack's four-question frame for threat modelling, or it's just asking "what's an example where someone could have been in a better place if they had segmented their network?"

Is this a property of the system that would help its resilience? Is the cost of this mitigation worth its implementation for this system?

The organisation understands the regulatory requirements and where it needs to apply controls indiscriminately. Sometimes it goes as far as arguing against them, when the regulator's recommendation lags behind current understanding.

The approach goes back to providing the context for the requirement. It tests the assumptions we make of the system that we're trying to protect. It validates the security properties and principles we're trying to uphold.

The organisation understands why intelligence-led operations are important, and why resilience and stressed exit plans are part of its regulatory requirements.

Following our example, we can justify why there is a need to separate our staging environment from our production environment. We apply the same controls across the environments. People don't have direct access to data, definitely not to prod. We have confidence in our CI/CD and our deployment is the same across environments. Our app server in test won't have authentication credentials to the prod database, or vice versa.
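That last property, that no environment holds another environment's database credentials, is the kind of thing that can be checked mechanically. A minimal sketch, assuming a hypothetical mapping from environment name to the secret identifiers its app server can read, and a made-up naming convention where each secret is prefixed with its environment:

```python
def foreign_secrets(env_secrets: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each environment to the secrets it can read that belong elsewhere.

    Assumes (hypothetically) that secret names look like "<env>/<name>".
    """
    violations: dict[str, set[str]] = {}
    for env, secrets in env_secrets.items():
        # A secret is "foreign" when its prefix is not this environment.
        foreign = {s for s in secrets if s.split("/", 1)[0] != env}
        if foreign:
            violations[env] = foreign
    return violations


# Illustrative data: the test app server can read a prod secret.
readable = {
    "test": {"test/db-password", "prod/db-password"},
    "prod": {"prod/db-password"},
}

print(foreign_secrets(readable))  # {'test': {'prod/db-password'}}
```

An empty result is the property we claimed; anything else is a conversation worth having.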

The direction of this organisation is that it makes justifiable decisions to mitigate plausible threat scenarios in the context in which it operates.

Product comes first and security is an enabler.

Decisions are not binary, and tradeoffs are understood and captured. In six months' time we might have to make a different decision. That's fine, the system is changing and we need to adapt. That's the core part of resilience after all.
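Capturing a decision can be as light as a record with a review date. The sketch below is one possible shape, not a prescribed format: the `Decision` fields, the example values, and the dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Decision:
    control: str
    threat_scenario: str
    tradeoff: str
    decided_on: date
    review_after: timedelta


def due_for_review(decision: Decision, today: date) -> bool:
    """Return True once the review interval has elapsed."""
    return today >= decision.decided_on + decision.review_after


# Illustrative record for the segmentation example.
segmentation = Decision(
    control="Separate staging and production",
    threat_scenario="Test app server credentials used against the prod database",
    tradeoff="Slower promotion of workloads between environments",
    decided_on=date(2024, 1, 15),
    review_after=timedelta(days=182),  # roughly six months
)

print(due_for_review(segmentation, date(2024, 3, 1)))  # False
print(due_for_review(segmentation, date(2024, 9, 1)))  # True
```

The point is less the data structure and more that the threat scenario and the tradeoff sit next to the control, so the next review has something to argue with.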

What's next

Next time your organisation is about to implement a technical control, stop and ask: why are we really doing this?

It might help you assess where you stand and the drivers of your cybersecurity programme.

This model can also be useful, aside from being wrong.

Before you bring up your favourite control catalogue, risk framework, or public breach/anecdote, that's the point I'm making.