Check out Clotho and a working example using Squid

In public cloud computing, public endpoints are unavoidable: they are what puts the "public" in public cloud.

Consider this scenario: It's Monday, and you're logging into Outlook on the web (OWA) to access your work email.

Interestingly, the same URL that gives you access to your work account also lets you log into your personal account, so you can transfer data directly from your work laptop to your personal email. You simply open a new tab, log in to your personal email and upload all your work files.

At first glance, this might seem like a convenient feature. However, it's precisely this type of activity that a ~$2bn market is trying to mitigate. Data Loss Prevention (DLP) solutions are designed to detect such unauthorized data transfers or exfiltration events.

A DLP solution would be able to spot an exfiltration event from your work email to your personal email. In that case, the responsibility falls on the email provider, which needs to provide the security controls to prevent or detect exfiltration events.

However, that's not the path you took. You're uploading data to a trusted vendor endpoint, from a trusted work device, in a trusted work network, to your own email.

You are, after all, just logging in to Outlook; there is nothing malicious about it.

The endpoint serves everyone; therefore, if you decide to email yourself a spreadsheet, the responsibility for preventing or detecting this event falls on your own network and devices.

We need a way to prevent an attacker from accessing their own email from our network, whilst still allowing access to the endpoint.

Instead of email, think about any service from a major public Cloud Service Provider (CSP).

There are two ways to connect to a CSP's services: over the internet, or over Private Link.

In AWS specifically, some private endpoints do not support endpoint policies, and some services do not support endpoints at all [1].

We need access to AWS endpoints, sometimes over the internet. Sometimes it's an S3 bucket that has a picture of a cat: a chunk of the web still works by serving content from intentionally public-readable S3 buckets.

It follows that an attacker can access their own S3 bucket (or any other service with no endpoint or endpoint policy) from our network over the internet, using their own account credentials, and we won't be able to prevent, or trivially detect, the event.

The risk becomes apparent when:

- We place people next to data [2], i.e. an insider risk.

- An attacker is in our network with data and wants to get out.

Keeping people away from data is a challenge, but once achieved, it removes a significant amount of risk from the system and the organisation [3].

Insider risks are well known [4], especially in large organisations.

When we PUT an object in an S3 bucket over the internet, our request goes to one of the public S3 endpoints. How can we tell that the PUT lands in our S3 bucket and not the attacker's? In other words, how do we stop cross-account/tenant/organisation access over the internet? How can we prevent an attacker inside our network from using cloud service public endpoints to reach their own accounts?

In AWS, Azure, and GCP, this is a known threat. Azure and GCP share a similar approach and enable you to put tenant/organisation restrictions in place. Here's how they work:

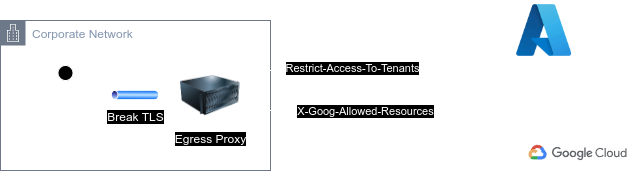

Traffic sent from anything inside your corporate network must go via an egress proxy before it reaches the public endpoint of the CSP.

The proxy will "break" TLS, inject a custom HTTP header and forward the request to the CSP's public endpoint.

The injected HTTP header contains the unique IDs of the corporate tenants. In other words, it's an allowlist. If the request tries to access resources in, or authenticate to, anything other than the allowlisted tenants, it is denied.

This means we don't have to do any further inspection of the credentials or the type of request that was sent. All we need to do is get a list of our tenant/organisation IDs and force everyone through the egress proxy (which we are probably already doing), and we've mitigated our cross-tenant/organisation threat.
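For illustration, a minimal Python sketch of the header-injection step. The header names follow Microsoft's tenant restrictions v1 documentation (Restrict-Access-To-Tenants, Restrict-Access-Context) and Google Cloud's organization restrictions documentation (X-Goog-Allowed-Resources, carrying a web-safe base64-encoded JSON policy); treat them as assumptions to check against the vendors' current docs. The function names, tenant names and IDs are hypothetical, and the TLS interception and forwarding are left out.

```python
import base64
import json


def azure_tenant_restriction_headers(allowed_tenants, context_tenant_id):
    """Azure tenant restrictions (v1): the allowlisted tenants, plus the
    directory ID of the tenant that sets the restriction."""
    return {
        "Restrict-Access-To-Tenants": ",".join(allowed_tenants),
        "Restrict-Access-Context": context_tenant_id,
    }


def gcp_org_restriction_headers(org_ids):
    """Google Cloud organization restrictions: web-safe base64 of a JSON
    policy listing the allowed organizations."""
    policy = {
        "resources": [f"organizations/{org_id}" for org_id in org_ids],
        "options": "strict",
    }
    value = base64.urlsafe_b64encode(json.dumps(policy).encode("utf-8"))
    return {"X-Goog-Allowed-Resources": value.decode("ascii")}


def inject_headers(request_headers, allowlist_headers):
    """What the proxy does after breaking TLS: drop any client-supplied
    copies of the allowlist headers (header names are case-insensitive),
    then add the proxy's own values and forward everything else unchanged."""
    names = {name.lower() for name in allowlist_headers}
    forwarded = {k: v for k, v in request_headers.items() if k.lower() not in names}
    forwarded.update(allowlist_headers)
    return forwarded


# Hypothetical tenant identifiers.
headers = inject_headers(
    {"Host": "login.microsoftonline.com"},
    azure_tenant_restriction_headers(
        ["contoso.onmicrosoft.com"], "00000000-0000-0000-0000-000000000000"
    ),
)
```

Stripping client-supplied copies first matters: otherwise a user could send their own allowlist header and hope it wins.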

Azure and GCP also provide more granular capabilities with Azure tenant restrictions v2, GCP organisation restrictions and Google consumer domain blocks.

What about AWS? It's complicated. AWS launched Management Console Private Access: you build a VPC in an AWS account and create two new VPC endpoints, console and signin, then force all of your corporate users' traffic to these VPC endpoints instead of the public endpoints.

There are problems with this approach.

- It doesn't support API access. We're still not solving the issue of services that lack VPC Endpoints or VPC Endpoint Policies.

- It complicates the setup when we need both console and API access.

- Region and service coverage is not yet complete.

That's why I made Clotho.

Clotho

Clotho aims to be a fast and secure AWS sigv4 verification library, written in Rust, that can be used as an external authoriser with your favourite forward proxy.

It aims to provide the equivalent of the Azure and GCP solutions for AWS, but without injecting any headers or altering the request in any way.

Why do you need this?

Security: you can filter sigv4 requests by account ID, region, or AWS service. For example, your internet egress traffic can be limited to your own AWS accounts. This is especially important for AWS services that do not support VPC endpoints.

Cost savings: it can be cheaper to run than VPC endpoints.

Clotho won't apply any IAM policy checks based on your IAM identity. It simply parses the Authorization header and applies an allowlist check based on the account, region, or service.

Before explaining how Clotho works, we first need to understand what's in a typical AWS API request, and specifically its authorisation part, sigv4.

Here's an example Authorization header of an HTTP request to the AWS STS public endpoint API using the sigv4 scheme.

Building this request is an offline operation. All you need is an aws_secret_access_key, the region you intend to send the request to, and the service you're calling.

```
1. "Authorization": "AWS4-HMAC-SHA256
2. Credential=AKIAWNIPTZABWMEXAMPLE/20240107/eu-west-1/sts/aws4_request,
3. SignedHeaders=content-type;host;x-amz-date,
4. Signature=03707c980d16f1eecdf36551112fa6244764079272b6bb5cd708a4bd25d43e1e",
```

Line 1: The algorithm used to calculate line 4, the Signature component.

Line 2: the Credential component. It includes the access_key_id, the date the request was signed, the region and service the request is targeting, and the scope terminator, aws4_request.

Line 3: the SignedHeaders component, which lists the HTTP headers used to generate the signature.

These headers are not part of the snippet above, but they are part of the request.
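A minimal Python sketch of splitting the header value into these components, assuming the value has already been pulled out of the request (so without the surrounding "Authorization": quoting). CredentialScope and parse_authorization are hypothetical names for illustration, not Clotho's API; Clotho itself is written in Rust.

```python
from typing import NamedTuple


class CredentialScope(NamedTuple):
    access_key_id: str
    date: str        # YYYYMMDD the request was signed
    region: str
    service: str
    terminator: str  # always "aws4_request"


def parse_authorization(header: str) -> dict:
    """Split a SigV4 Authorization header value into its components."""
    algorithm, _, rest = header.strip().partition(" ")
    if algorithm != "AWS4-HMAC-SHA256":
        raise ValueError(f"unsupported algorithm: {algorithm!r}")

    # The rest is "Credential=..., SignedHeaders=..., Signature=..."
    components = {}
    for item in rest.split(","):
        name, sep, value = item.strip().partition("=")
        if not sep:
            raise ValueError(f"malformed component: {item!r}")
        components[name] = value

    missing = {"Credential", "SignedHeaders", "Signature"} - set(components)
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")

    scope = components["Credential"].split("/")
    if len(scope) != 5 or scope[4] != "aws4_request":
        raise ValueError("malformed credential scope")

    return {
        "algorithm": algorithm,
        "credential": CredentialScope(*scope),
        "signed_headers": components["SignedHeaders"].split(";"),
        "signature": components["Signature"],
    }
```

Only the credential scope matters for an allowlist decision; SignedHeaders and Signature are parsed here just to show the full structure.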

Clotho "verifies" the Credential component against a provided allowlist.

Since the Credential component is bound to the signature, if an attacker modifies any part of it, verification of the signature will fail. Tampering with the Signature equally results in verification failing, since it will no longer match the signature AWS computes for the request. Therefore a Monster in the Middle (MitM) attacker can replay a sigv4 request [5] but cannot tamper with it.
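To see why, here is a minimal Python sketch of the documented SigV4 key derivation: HMAC-SHA256 chained over the date, region, service and the aws4_request terminator, with the credential scope also included in the string to sign. This is roughly what AWS does with the secret key when the request arrives; Clotho never sees the secret and does not do this. The canonical request is a hypothetical placeholder rather than a properly built one, and the secret is AWS's documentation example key. The access key ID is not part of the HMAC chain itself, but AWS uses it to look up which secret to verify with, so swapping it also breaks verification.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_access_key: str, date: str, region: str, service: str) -> bytes:
    """Every element of the credential scope is folded into the key,
    so changing any of them changes every signature."""
    k_date = _hmac_sha256(("AWS4" + secret_access_key).encode("utf-8"), date)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(secret_access_key, amz_date, region, service, canonical_request):
    date = amz_date[:8]  # amz_date looks like 20240107T120000Z
    scope = f"{date}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])
    key = derive_signing_key(secret_access_key, date, region, service)
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


def server_side_verify(secret_access_key, amz_date, region, service, canonical_request, signature):
    """Recompute from the request as received; compare in constant time."""
    expected = sign(secret_access_key, amz_date, region, service, canonical_request)
    return hmac.compare_digest(expected, signature)


SECRET = "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"  # AWS documentation example key
CANONICAL = "hypothetical canonical request: method, path, query, headers, payload hash"
```

A MitM who rewrites eu-west-1 to us-east-1 in the Credential component changes both the derived key and the string to sign, so the original signature no longer verifies.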

The first and most important piece of information we need is the account that owns the access_key_id. Normally this could be done by calling the STS GetAccessKeyInfo API. However, we can unpack the AWS account ID [6] from the access_key_id itself. This saves a network call to the STS endpoint, so our verification can take place offline.
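A minimal Python sketch of the unpacking, based on the publicly reverse-engineered encoding rather than anything AWS documents, so treat it as an assumption that may not hold for every key. After the 4-character prefix (AKIA, ASIA, ...), the remaining 16 characters are RFC 4648 base32; of the first 48 decoded bits, the top bit and the low 7 bits are discarded and the 40 bits in between are the account ID. The function name is hypothetical.

```python
import base64


def account_id_from_access_key_id(access_key_id: str) -> str:
    """Recover the 12-digit AWS account ID embedded in an access key ID."""
    if len(access_key_id) != 20:
        raise ValueError("access key IDs are 20 characters long")
    decoded = base64.b32decode(access_key_id[4:])         # 16 chars -> 10 bytes
    value = int.from_bytes(decoded[:6], byteorder="big")  # first 48 bits
    account = (value & 0x7FFFFFFFFF80) >> 7                # drop top bit and low 7 bits
    return f"{account:012d}"                               # keep leading zeros


print(account_id_from_access_key_id("AKIAIOSFODNN7EXAMPLE"))  # 581039954779
```

The AWS documentation example key AKIAIOSFODNN7EXAMPLE decodes to 581039954779, the account used in the allowlist example in this post.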

We can then build an egress proxy that inspects any AWS API request, reads the Credential component of the Authorization header and compares the request to our own allowlist.

An allowlist in Clotho looks like this:

```yaml
accounts:
  "581039954779":
    "regions":
      "*":
        "services":
          - "s3"
```

This allows any request from account 581039954779, to any region, for the s3 service. If Clotho receives the following request ... Credential=AKIAIOSFODNN7EXAMPLE/20130524/us-east-1/s3/aws4_request, it can unpack the AWS account ID from the access_key_id and make a decision based on the allowlist. In this case, the request would be allowed. You can clone the repo and run the example yourself.
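To tie the pieces together, a minimal Python sketch of the allow/deny decision a proxy could make per request. It is a hypothetical reimplementation, not Clotho's code: the allowlist is the YAML above held as a Python dict (avoiding a YAML dependency), an exact region entry or the "*" wildcard can grant a service, and anything that can't be parsed is denied. Clotho's own matching rules may differ.

```python
import base64

# Hypothetical in-memory equivalent of the YAML allowlist.
ALLOWLIST = {
    "accounts": {
        "581039954779": {
            "regions": {
                "*": {"services": ["s3"]},
            },
        },
    },
}


def account_id(access_key_id: str) -> str:
    # Unpack the account ID: base32 after the 4-character prefix.
    if len(access_key_id) != 20:
        raise ValueError("unexpected access key ID length")
    value = int.from_bytes(base64.b32decode(access_key_id[4:])[:6], "big")
    return f"{(value & 0x7FFFFFFFFF80) >> 7:012d}"


def credential_scope(authorization: str) -> list:
    # Find "Credential=AKID/date/region/service/aws4_request" in the header.
    for token in authorization.replace(",", " ").split():
        if token.startswith("Credential="):
            return token[len("Credential="):].split("/")
    raise ValueError("no Credential component")


def is_allowed(authorization: str, allowlist: dict = ALLOWLIST) -> bool:
    """Allow only if the account, region and service are all allowlisted."""
    try:
        key_id, _date, region, service, terminator = credential_scope(authorization)
        account = account_id(key_id)
    except ValueError:
        # binascii.Error (bad base32) subclasses ValueError, so anything
        # unparseable lands here: fail closed.
        return False
    if terminator != "aws4_request":
        return False
    regions = allowlist["accounts"].get(account, {}).get("regions", {})
    return any(
        service in regions.get(name, {}).get("services", [])
        for name in (region, "*")
    )
```

With this allowlist, the request from the example above (AKIAIOSFODNN7EXAMPLE, us-east-1, s3) is allowed, while the same key calling sts is denied. A forward proxy would call is_allowed with the Authorization header value and reject the request when it returns False. Note that the decision needs no secret and no network call.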

I've included an example implementation for Squid, and another using Python's mitmproxy.

A back-of-the-envelope calculation comparing VPC endpoints and Clotho shows that Clotho could be cheaper. Here's a cost comparison, with the following assumptions:

- Running on us-east-1

- Everything runs across three AZs

- There is no intra-region data transfer cost when reaching the Clotho instances

- There are no egress costs for reaching AWS services in the same region (Reference)

- Clotho runs on Graviton instances with on-demand pricing

| Traffic | VPCE count | VPCE price | Clotho cost | Clotho instance type |
|---|---|---|---|---|
| 1 TB | 10 | $226 | $9.072 | t4g x3 |
| 1 TB | 20 | $433 | $9.072 | t4g x3 |
| 10 TB | 10 | $316 | $83.16 | c6gd x3 |
| 10 TB | 20 | $532 | $83.16 | c6gd x3 |
| 100 TB | 10 | $1216 | $1078.27 | c7gn.2xlarge x3 |
| 100 TB | 20 | $1432 | $1078.27 | c7gn.2xlarge x3 |
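The VPC endpoint column can be reproduced with a simple formula. The sketch below assumes us-east-1 prices of $0.01 per endpoint per AZ-hour and $0.01 per GB processed, with a 720-hour month; the Clotho figures are consistent with three t4g.nano instances at $0.0042/h and three c7gn.2xlarge instances at $0.4992/h. These prices and instance sizes are assumptions rather than figures stated above (c6gd is left out because its size isn't given). Under these assumptions the formula matches every VPCE row except 1 TB with 20 endpoints, where it gives $442 rather than $433.

```python
# Assumed us-east-1 on-demand prices (USD); check current AWS pricing.
HOURS_PER_MONTH = 720      # assumption: 30-day month
AZ_COUNT = 3
VPCE_PER_AZ_HOUR = 0.01    # interface endpoint, per endpoint per AZ
VPCE_PER_GB = 0.01         # data processed through the endpoint
GB_PER_TB = 1000


def vpce_monthly_cost(endpoints: int, traffic_tb: float) -> float:
    hourly = endpoints * AZ_COUNT * HOURS_PER_MONTH * VPCE_PER_AZ_HOUR
    processing = traffic_tb * GB_PER_TB * VPCE_PER_GB
    return hourly + processing


def clotho_monthly_cost(instance_hourly_price: float, instances: int = AZ_COUNT) -> float:
    # Compute only: one instance per AZ, running all month.
    return instance_hourly_price * instances * HOURS_PER_MONTH


if __name__ == "__main__":
    for tb in (1, 10, 100):
        for endpoints in (10, 20):
            print(f"{tb:>3} TB, {endpoints} endpoints: ${vpce_monthly_cost(endpoints, tb):,.0f}")
    print(f"t4g.nano x3: ${clotho_monthly_cost(0.0042):,.3f}")
    print(f"c7gn.2xlarge x3: ${clotho_monthly_cost(0.4992):,.2f}")
```

The structural point holds regardless of exact prices: VPC endpoint cost grows with the number of endpoints, while Clotho's cost depends only on the instances needed for the traffic.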

With Clotho, you pay only for compute. The instance types are scaled up vertically at higher traffic to accommodate higher bursts, since a VPC endpoint can burst to 100 Gbit/s with 10 Gbit/s sustained.

A t4g instance offers up to 5 Gbit/s, and a c7gn.2xlarge up to 50 Gbit/s (figures taken from ec2instances.info).

Clotho is at a very early stage, and contributions are welcome.

References:

1. AWS Certificate Manager, AWS WAF, and Route 53 come immediately to mind.

2. SEC08-BP05 Keep people away from data. This principle extends outside of the cloud.

3. The Ice Cream Cone Hierarchy of Security Solutions by Kelly Shortridge is a fantastic example. The less dependent your system is on human behaviour, the better.

4. An engineer creating a "builder" VPC, with an admin-like role that has internet access so that the platform can import certificates into AWS ACM, is necessary but risky. It doesn't have to be spreadsheets.

5. This depends on the nature of the request and whether it's idempotent or not; not all AWS APIs are the same. The replay also has a time limit of ~15 minutes.